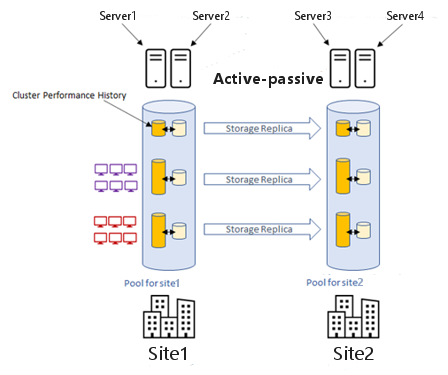

Imagine a world where a hardware failure or natural disaster cripples your business-critical applications. Shudders, right? Thankfully, Azure Stack HCI offers a built-in hero: Storage Replica. This disaster recovery (DR) technology empowers you to create synchronous or asynchronous copies of your storage volumes across geographically dispersed sites. Let’s delve into the world of Azure Stack HCI Storage Replica, equipping you to safeguard your valuable data.

Understanding Storage Replica: Replication Magic

At its core, Storage Replica performs block-level volume replication between servers or clusters. In a stretched cluster, this enables automatic failover of virtual machines (VMs) if the primary site is lost; in server-to-server and cluster-to-cluster configurations, failover is a manual step. Storage Replica offers two replication modes:

- Synchronous Replication: Each write is committed to the log at both sites before the application receives an acknowledgment, so the two copies stay consistent and no acknowledged data is lost if the primary site fails (perfect for critical applications). The trade-off is added write latency, so this mode needs a low-latency link; Microsoft's guidance is an average round-trip latency of 5 ms or less.

- Asynchronous Replication: Writes are acknowledged at the primary site first and then replicated continuously to the secondary site, which can therefore lag slightly behind. This tolerates higher-latency links (suitable for geographically distant sites), but a disaster can cost you the most recent writes that had not yet reached the secondary site.
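To get a rough feel for which mode a given link can support, you could sample the round-trip time between the two sites. The following is only a sketch: the host name `sr-dest01` is a hypothetical placeholder, the 5 ms cut-off is an assumption based on Microsoft's published guidance for synchronous replication, and ICMP ping is a coarse proxy for real storage traffic.

```powershell
# Hypothetical destination host; replace with a server at your secondary site.
$Destination = 'sr-dest01'
$ThresholdMs = 5   # assumed cut-off for synchronous replication

# Send 20 ICMP echo requests and collect the replies.
$pings = Test-Connection -ComputerName $Destination -Count 20

# Windows PowerShell 5.1 exposes 'ResponseTime'; PowerShell 7 exposes 'Latency'.
$prop = if ($pings[0].PSObject.Properties['Latency']) { 'Latency' } else { 'ResponseTime' }
$avgMs = ($pings | Measure-Object -Property $prop -Average).Average

if ($avgMs -le $ThresholdMs) {
    "Average RTT {0:N1} ms: synchronous replication looks feasible." -f $avgMs
} else {
    "Average RTT {0:N1} ms: consider asynchronous replication." -f $avgMs
}
```

Dropped or timed-out pings can skew the average, so treat the result as a first indication rather than a verdict.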

Benefits of Utilizing Storage Replica

The advantages of leveraging Storage Replica in your Azure Stack HCI environment are undeniable:

- Disaster Recovery: The primary benefit is robust disaster recovery. In the event of a disaster at the primary site, VMs can be seamlessly failed over to the secondary site, minimizing downtime and data loss.

- Improved Business Continuity: By ensuring application availability, Storage Replica safeguards your business continuity. Critical operations can resume quickly, minimizing disruptions and potential financial losses.

- Scalability and Flexibility: Storage Replica caters to diverse deployment needs. Synchronous replication provides high availability for critical workloads, while asynchronous replication offers flexibility for geographically distant sites.

Planning and Implementing Storage Replica

Before deploying Storage Replica, some planning is crucial:

- Identify Critical VMs: Prioritize the VMs that require replication for optimal disaster recovery.

- Network Assessment: Evaluate your network latency and bandwidth to determine the suitability of synchronous or asynchronous replication.

- Storage Sizing: Plan for destination data volumes that match the size of the source volumes, plus a dedicated log volume at each site.
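For the network and storage assessment, the Storage Replica module includes the Test-SRTopology cmdlet, which checks prerequisites and measures the write workload over a sampling period. The sketch below assumes a server-to-server layout; the server names, drive letters, duration, and result path are all hypothetical placeholders.

```powershell
# Hypothetical names and paths; adjust to your environment.
# The data and log volumes must already exist on both servers,
# and the result folder must exist on the machine running the test.
$topology = @{
    SourceComputerName       = 'sr-src01'
    SourceVolumeName         = 'D:'
    SourceLogVolumeName      = 'E:'
    DestinationComputerName  = 'sr-dest01'
    DestinationVolumeName    = 'D:'
    DestinationLogVolumeName = 'E:'
    DurationInMinutes        = 30
    ResultPath               = 'C:\Temp'
}

# Runs the assessment and writes an HTML report to the result folder.
Test-SRTopology @topology
```

Running the test while the source volume carries a representative workload gives the most useful picture of what replication will demand from the link.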

Implementation Tools:

There are two primary tools for implementing Storage Replica:

- Windows Admin Center: This user-friendly interface provides a visual representation of your Storage Replica environment. You can create and manage replication groups and volumes, and monitor their health status.

- PowerShell Cmdlets: For granular control and automation, PowerShell offers a robust set of cmdlets for Storage Replica configuration, monitoring, and failover operations. Here are some key cmdlets to get you started:

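- New-SRPartnership: Creates a replication partnership between a source and a destination server or cluster, including the replication group on each side, and kicks off the initial synchronization.

- New-SRGroup: Creates a replication group on a single server, made up of one or more data volumes and a log volume.

- Set-SRGroup: Modifies an existing replication group, for example to add data volumes or resize the log.

- Set-SRPartnership: Modifies an existing partnership, such as its replication mode, and reverses the replication direction during a failover.

- Sync-SRGroup: Resumes replication for a group that was previously suspended with Suspend-SRGroup.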

- Get-SRGroup: Retrieves information about the health and status of your Storage Replica groups.
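Cmdlet availability depends on the OS version and on whether the Storage Replica feature and its management tools are installed, so it can be worth checking what your system actually offers before scripting against it. A minimal check might look like this:

```powershell
# List the Storage Replica cmdlets available on this machine.
Get-Command -Module StorageReplica | Sort-Object Name | Select-Object Name

# Show the parameters of one cmdlet from the built-in help.
Get-Help Get-SRGroup -Parameter *
```

If the first command returns nothing, the module is most likely not installed on that machine.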

Implementation Steps:

- Create the Partnership: Use Windows Admin Center or the New-SRPartnership cmdlet to define the source and destination servers/clusters and the replication group on each side.

- Select Volumes for Replication: Identify the data volumes containing the critical VM data, plus a dedicated log volume at each site, and specify them in Windows Admin Center or as parameters of New-SRPartnership. Further data volumes can be added to an existing group later with Set-SRGroup.

- Configure Replication Settings: Choose the replication mode (synchronous or asynchronous) using Windows Admin Center or the -ReplicationMode parameter. Asynchronous replication streams changes continuously rather than on a schedule, so how far the secondary site trails depends on the write load and the available bandwidth.

- Enable Replication: Initial synchronization begins once the partnership is created. Wait for it to complete before relying on the secondary site for failover.
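As one way to express the steps above in PowerShell, a single New-SRPartnership call can define both replication groups, their volumes, and the replication mode. This is a sketch for a server-to-server layout, not a definitive procedure: every server name, group name, and drive letter is a hypothetical placeholder, and clustered deployments would typically reference cluster volume paths instead.

```powershell
# All names below are hypothetical placeholders.
$partnership = @{
    SourceComputerName       = 'sr-src01'
    SourceRGName             = 'rg-primary'
    SourceVolumeName         = 'D:'
    SourceLogVolumeName      = 'E:'
    DestinationComputerName  = 'sr-dest01'
    DestinationRGName        = 'rg-secondary'
    DestinationVolumeName    = 'D:'
    DestinationLogVolumeName = 'E:'
    ReplicationMode          = 'Asynchronous'   # or 'Synchronous'
}

# Creates both replication groups and the partnership between them.
New-SRPartnership @partnership

# Confirm that the partnership exists and check its direction.
Get-SRPartnership
```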

Day-to-Day Operations and Monitoring

Storage Replica offers robust management and monitoring capabilities to ensure the health and effectiveness of your DR solution:

- Windows Admin Center: Provides a centralized view of your Storage Replica environment, allowing you to monitor replication health, view detailed information about volumes and groups, and perform basic management tasks.

- PowerShell Cmdlets: Offer extensive control for monitoring and managing replication. You can use cmdlets like Get-SRGroup to retrieve detailed information about replication status, identify errors, and troubleshoot issues.

- Azure Monitor Integration: Integrate Storage Replica with Azure Monitor for in-depth insights. Set up alerts to be notified of potential issues like replication failures or performance bottlenecks.
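A quick health check from PowerShell could look like the sketch below. It assumes you run it on a server that participates in the partnership; the property selection and the event count of 20 are arbitrary choices for illustration.

```powershell
# Show status and remaining bytes for each replica in the local replication groups.
(Get-SRGroup).Replicas |
    Select-Object DataVolume, ReplicationMode, ReplicationStatus, NumOfBytesRemaining

# Show the 20 most recent Storage Replica events for troubleshooting.
Get-WinEvent -ProviderName 'Microsoft-Windows-StorageReplica' -MaxEvents 20 |
    Format-List TimeCreated, Id, LevelDisplayName, Message
```

Watching the remaining byte count fall toward zero on the destination is a simple way to follow the progress of initial synchronization.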

Testing Failover: Practice Makes Perfect

While prevention is key, testing your disaster recovery plan is crucial. Here’s how to test failover functionality with Storage Replica:

- Planned Failover: Perform a planned failover to the secondary site during a maintenance window. This allows you to validate the failover process and ensure VMs can be successfully activated at the secondary site. You can initiate a planned failover using Windows Admin Center or by reversing the replication direction with the Set-SRPartnership PowerShell cmdlet.

- Test Failover with Hyper-V Replica: If you’re using Storage Replica in conjunction with Hyper-V Replica for VM replication, conduct a combined test failover to simulate a complete disaster scenario.
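In PowerShell, a planned failover in a server-to-server or cluster-to-cluster setup amounts to reversing the replication direction so that the former destination becomes the source. The sketch below uses hypothetical server and group names and assumes initial synchronization has already completed.

```powershell
# Hypothetical names: 'sr-dest01' / 'rg-secondary' is the current destination
# and becomes the new source; 'sr-src01' / 'rg-primary' becomes the destination.
$switch = @{
    NewSourceComputerName   = 'sr-dest01'
    SourceRGName            = 'rg-secondary'
    DestinationComputerName = 'sr-src01'
    DestinationRGName       = 'rg-primary'
}

# Reverses the replication direction; expect a confirmation prompt.
Set-SRPartnership @switch

# Verify the new direction.
Get-SRPartnership
```

Running the same call with the names swapped returns replication to its original direction once the test is done.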

Conclusion: Building a Resilient Azure Stack HCI Environment

By leveraging Storage Replica’s capabilities, you can create a robust disaster recovery strategy for your Azure Stack HCI environment. With planned replication, comprehensive monitoring, and regular testing, you’ll ensure business continuity and minimize the impact of potential disasters. Remember, data protection is paramount, and Storage Replica empowers you to become the guardian of your critical information.

Additional Considerations

- Security: Implement robust security measures at both primary and secondary sites to safeguard your replicated data. This includes encryption for data at rest and in transit.

- Network Bandwidth: Monitor network bandwidth consumption, especially during initial replication and failover events. Ensure your network has sufficient capacity to handle replication traffic without impacting production workloads.

- Resource Allocation: Factor in the additional storage and compute resources required at the secondary site to accommodate replicated VMs during a failover event.
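One way to keep replication traffic from competing with production workloads is to pin it to dedicated network interfaces with Set-SRNetworkConstraint. The sketch below is illustrative only: the server names, group names, and interface indexes are hypothetical and must be replaced with values from your own environment.

```powershell
# Find the interface index of the NIC reserved for replication (run on each server).
Get-NetIPConfiguration | Select-Object InterfaceAlias, InterfaceIndex, IPv4Address

# Hypothetical names and interface indexes.
$constraint = @{
    SourceComputerName      = 'sr-src01'
    SourceRGName            = 'rg-primary'
    SourceNWInterface       = 2
    DestinationComputerName = 'sr-dest01'
    DestinationRGName       = 'rg-secondary'
    DestinationNWInterface  = 3
}

# Restrict replication for this partnership to the chosen interfaces.
Set-SRNetworkConstraint @constraint

# Review the constraints currently in place.
Get-SRNetworkConstraint
```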

By following these guidelines and leveraging the power of Storage Replica, you can build a highly available and disaster-resistant Azure Stack HCI environment that keeps your business applications running smoothly, no matter what challenges arise.